We strongly encourage you to use the COPY command to load large amounts of data. Try to insert new data in batches (large ones if possible) and use the COPY statement to do it. This one is a pretty common best practice when dealing with large amounts of data.

Keep your writes batched - Use COPY (batches) instead of inserts A good way to do that is to insert data in a staging table, where it can be modified, before being moved to the master table.

VACUUM ANALYZE REDSHIFT UPDATE

For those reasons, the best thing is to do as little UPDATE as possible. They take a lot of CPU to process, unsort the afftected rows and, are usually pretty long to complete.

UPDATE statements are really not the strong suit of Redshift. Keep your data clean - No updates if possible For us, the sweet spot was under 75% of disk used. Be sure to keep enough space on disk so those queries can complete successfully. In Redshift, when scanning a lot of data or when running in a WLM queue with a small amount of memory, some queries might need to use the disk. Keep enough space to run queries - Disk space Keep in mind that the total concurrency of the cluster cannot be greater than 25.

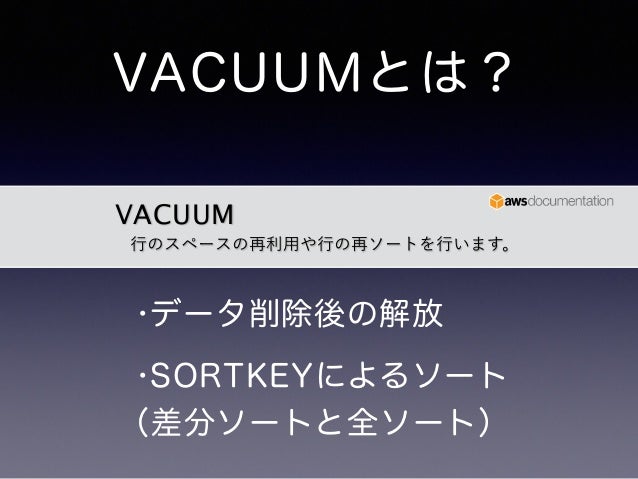

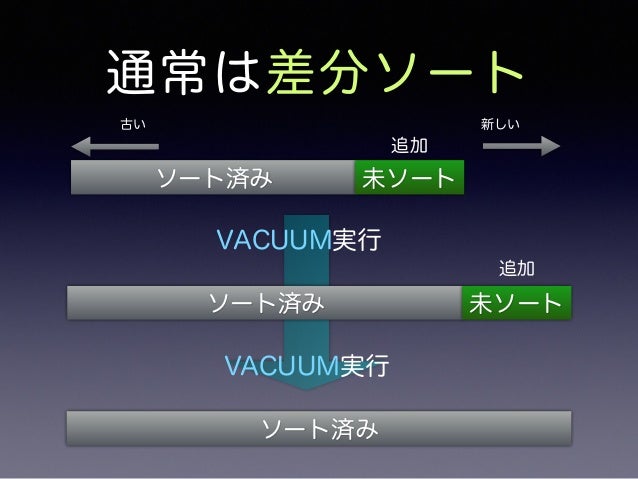

Insertion queue with a 15 minutes timeout, concurrency of 3 and 5% of the cluster’s memory.Maintenance queue with a 1 hour timeout, concurrency of 3 and 5% of the cluster’s memory.Reporting queue with a 2 minutes timeout, concurrency of 15 and 90% of the cluster’s memory.Here is what our configuration looks like: Data insertion and long running maintenance queries are less important. In our case, we try to minimize the response time of reporting queries. Be sure to adapt the WLM queues configuration to fit your use case. It allows you to assign more memory for critical queries, how many queries can run concurrently, set a higher timeout for longer queries, etc. Workload Management (WLM) queue configuration is very important for query performance. Keep spots to run important queries - WLM queues setup VACUUMs will be a lot faster if your data is inserted in sort key order.Ĭheck the documentation about vacuuming tables and analyzing tables (it was greatly improved over the years and is now pretty neat).This means that, at some point when you have a lot of data, cleanup operations might stop running as often as configured.By default, VACUUM will not sort if 95% of the rows in a table are already sorted and ANALYZE will be skipped for any table with less than 10% of changed rows. More often than that had negative performance impacts. From what we experienced, once a week is enough and once a day is optimal.Depending on your use case, you may not need to run those operations often.VACUUMs are heavy on both CPU and disk space, so it’s better to run it during a period of low usage.Keep those things in mind when running those operations: remove deleted rows to reclaim space and sort the newly inserted data and the ANALYZE will update metadata used by the query planner. One way to do that is to run VACUUM and ANALYZE commands. I talked a lot in my last post about the importance of the sort keys and the data being sorted properly in Redshift. Here goes! Keep your custer clean - Vacuum and Analyze Since I’m not one for long blog posts, I decided to keep some for a second post. In my last post, I shared some of the wisdom I gathered over the 4 years I’ve worked with AWS Redshift.

0 kommentar(er)

0 kommentar(er)